Bright Ideas, Big City

20 May 2010 | In Meta-ethics, Moral Psychology, Naturalism, Psychology, Self-indulgence | Comments?

Tomorrow, I’m giving a short presentation at a lab meeting with the sinisterly named MERG (Metro Experimental Research Group) at NYU. The title is ”Value-theory meets the affective sciences – and then what happens?”. For once, the question tacked on for effect at the end will be a proper one (normally when using this title, I just go ahead and tell the participants what happens). I really want to know what should happen, and how the ideas I’ve been exploring could be translated into a proper research program. I’m constantly finding experimental ”confirmation” of my pet ideas in every branch of psychology I dip my toes in, but there are obvious risks with this way of doing ”research”. The question is whether, and how, those ideas might actually help design new experiments and studies better suited to confirm (or disconfirm) them.

I believe meta-ethics could and should be naturalized, and I have certain ideas about what would happen if it were. Now, we prepare for the scary part.

Moral Babies

8 May 2010 | In Books, Emotion theory, Moral Psychology, Naturalism, parenting, Psychology, Self-indulgence | Comments?

The last few years have seen a number of different approaches to morality become trendy and arouse media interest. Evolutionary approaches, primatological, cognitive science, neuroscience. Next in line are developmental approaches. How, and when, does morality develop? From what origins can something like morality be construed?

Alison Gopnik devoted a chapter of her ”The Philosophical Baby” to this topic and called it ”Love and Law: the origins of morality”. And just the other day, Paul Bloom had an article in the New York Times reporting on the admirable and adorable work being done at the Infant Cognition Center at Yale.

Basically, we used to think (under the influence of Piaget/Kohlberg) that babies were amoral, and in need of socialization in order to become proper, moral beings. But work at the lab shows that babies prefer kind characters to mean characters quite early, maybe as early as age 6 months, even when the kindness/meanness doesn’t affect the baby personally. The babies observe a scene in which a character (in some cases a puppet, in others a triangle or square with eyes attached) either helps or hinders another. Afterwards, they are shown both characters, and they tend to choose the helping one. Slightly older babies, around the age of 1, even choose to punish the mean character. Bloom’s article begins:

Not long ago, a team of researchers watched a 1-year-old boy take justice into his own hands. The boy had just seen a puppet show in which one puppet played with a ball while interacting with two other puppets. The center puppet would slide the ball to the puppet on the right, who would pass it back. And the center puppet would slide the ball to the puppet on the left . . . who would run away with it. Then the two puppets on the ends were brought down from the stage and set before the toddler. Each was placed next to a pile of treats. At this point, the toddler was asked to take a treat away from one puppet. Like most children in this situation, the boy took it from the pile of the “naughty” one. But this punishment wasn’t enough — he then leaned over and smacked the puppet in the head.

In a further twist on the scenario, babies (at 8 months) were asked to choose between still other characters who had either rewarded or punished the behavior displayed in the first scenario. In this experiment, the babies tended to go for the ”just” character. This is quite amazing, seeing how the last part of the exchange would have been a punishment (which is something bad happening, though to a deserving agent). It takes quite extraordinary mental capacities to pick the ”right” alternative in this scenario.

If babies are born amoral, and are socialized into accepting moral standards, something like relativism would arguably be true, at least descriptively. Descriptively, too, relativism often seems to hold: we value different things and a lot of moral disagreement seems to be impossible to resolve. In some moral disagreements, we reach rock-bottom, non-inferred moral opinions and the debate can go no further. This is what happens when we ask people for reasons: they come to an end somewhere, and if no commonality is found there, there is nothing left to do.

A common feature of the evolutionary, biological, neurological etc. approaches to morality is that they don’t want to leave it at that. If no commonality is found in what we value, or in the reasons we present for our values, we should look elsewhere, to other forms of explanation. We want to find the common origin of moral judgments, if nothing else in order to diagnose our seemingly relativistic moral world. But possibly, this project can be made more ambitious, and claim to found an objective morality on whatever common origins occur in those explanations.

If the earlier view on babies is false, if we actually start off with at least some moral views (which might then be modulated by culture to the extent that we seem to have no commonality at all), and these keep at least some of their hold on us, we do seem to have a kind of universal morality.

We start life, not as moral blank slates, but pre-set to the attitude that certain things matter. Some facts and actions are evaluatively marked for us by our emotional reactions, and can be revealed by our earliest preferences. Preferences can be conditioned into almost any kind of state (even though some types of objects will always be better at evoking them), so it’s often hard to find this mutual ground for reconciliation in adults. That is precisely why it’s such a splendid idea to do this sort of research on babies.

Psychopath College

6 May 2010 | In Emotion theory, Meta-ethics, Moral Psychology, Neuroscience, Psychology, Self-indulgence | Comments?

What is wrong with psychopaths? Seriously? I’m not asking in a semi-mocking, Seinfeld-esque ”what is the deal with X” kind of way. I’m seriously interested in finding out. Is there something they’re not getting, or something they don’t care about? And is caring about something really that different from understanding it? (In the Simpsons episode ”Lisa’s Substitute” Homer, trying to comfort Lisa, memorably says ”Hey, just because I don’t care doesn’t mean I don’t understand”).

Like most people interested in philosophy, I’ve been accused of being ”too rational” and, by implication, deficient in the feelings department. And, like most people interested in philosophy would, I’ve dealt with this accusation, not by throwing a tantrum, but by taking the argument apart. To the accuser’s face, if he/she sticks around long enough to hear it. When people tell me I’m a know-it-all, I start off on a ”This is why you’re wrong” list.

So, when it happens that someone compliments me on some human insight or displayed emotional sensitivity, I tend to make the in-poor-taste sort of joke ”Psychopath College can’t have been a complete waste of time and money, then”.

Psychopath College, you see, is a fictional institution (Aren’t they all? No.) that I’ve made up. It refers to the things you do when you don’t have the instincts or the normal emotional and behavioral reactions, but still want to fit in. You learn about them by careful observation, you try to find a rationale for them, a mechanism that will help you understand them. In the end, you manage to mimic normal behavior and make the right predictions. (Like all intellectuals, led by the editors of Le Monde diplomatique, I learned to ”care” about football during the 1998 World Cup, not in the ”normal” way, but for, you know, pretentious reasons.)

It’s commonly believed that psychopaths’ ability to manipulate people depends on precisely this fact: they don’t rely on non-inferred keen instinct and intuition but actually need to possess the knowledge of what makes people behave and react the way they do. And this knowledge can be transferred into power, especially as psychopaths are not as betrayed by unmeditated emotional reactions as the rest of us are.

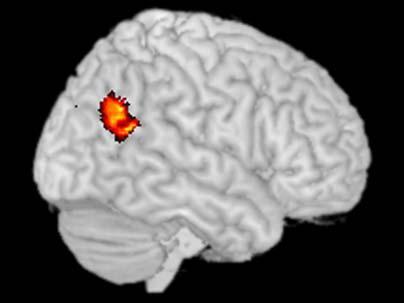

A recent study reported in the journal ”Psychiatry Research: Neuroimaging” found that psychopathic and non-psychopathic offenders performed equally well on a task judging what someone whose intentions were fulfilled, or not fulfilled, would feel. But when they do, different parts of the brain are more activated. In psychopaths, the attribution of emotions is associated with activity in the orbitofrontal cortex, believed to be concerned with outcome monitoring and attention. (This said, the authors admit that the role of the OFC in psychopathy is highly debated.) In non-psychopaths, on the other hand, the attribution is rather correlated with the ”mirror-neuron system”. In short, psychopaths don’t do emotional simulation, but rational calculation, and the successful ones reach the right conclusions.

The task described in the paper (”In psychopathic patients emotion attribution modulates activity in outcome-related brain areas”) is a very simple one, and offers no information on which ”method” performs better when the task is complex, or whether they may be optimal under different conditions.

Since knowing and caring about the emotional state of others is, arguably, at the heart of morality, studies like these are of great interest and importance. What, and how, do psychopaths know about the emotional state of others? And might the reason that they don’t seem to care about it be that they know about it in a non-standard way? Jackson and Pettit argued in their minor classic of a paper ”Moral functionalism and moral motivation” that moral beliefs are normally motivating because they are normally emotional states. You can have a belief with the same content, but in a non-emotional, ”off-line” way, and then it seems possible not to care about morality. Arguably, this is what psychopaths do, when they seem to understand, but not to care.

As Blair et al. (The Psychopath) argue, one of the deficiencies associated with psychopathy is in emotional learning. This makes perfect sense: if you learn about the feelings of others in a non-emotional way, you don’t get the kind of emphasis on what is relevant that emotions usually convey. Since moral learning is arguably based on a long socialization process in which emotional cues play a central part, no wonder if psychopaths end up deficient in that area.

What can Psychopath College accomplish by way of moving from knowing to caring? It is not that psychopaths don’t care about anything; they are usually fairly concerned with their own well-being, for instance. So the architecture for caring is in place; why can’t we bring it to bear on moral issues? Perhaps we can. Due to the emphasis on the anti-social in the psychopathy checklists, we might miss out on a large group of people who actually ”cope” with psychopathy and construe morality by independent means.

One thing that interests me about psychopaths, who clearly care about themselves and, I believe, care about being treated fairly and with respect, is this: Why can’t they generalize their emotional reactions? This is highly relevant, seeing how generalising moral values when there is no relevant difference is held to be a pure requirement of rationality in a classic argument found at least in Mill and Sidgwick and, memorably, in Peter Singer. The thought is that you establish what’s good by emotional experiences, and then you realise that if it’s good for me, there is no reason why the same experience would not be good for others as well. So the justification of generalisation is a rational one. But the mechanism by which this generalisation gets its force is probably not, and depends on successful emotional simulation, a direct, non-considered emotional reactivity (then again, whether you manage to ”simulate” animals like slugs or, pace Nagel, bats, might be a matter of imagination, not rationality or emotionality).

So what does this possibility say about the epistemic status of our moral convictions, eh?

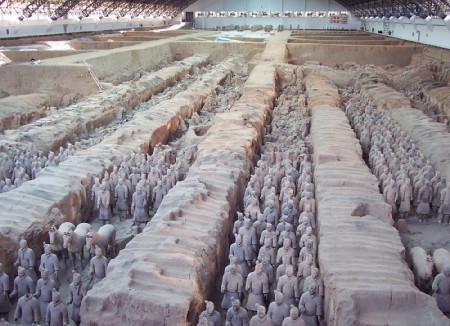

Reasons and Terracotta

3 May 2010 | In Emotion theory, Meta-ethics, Moral Psychology, Psychology, Self-indulgence | Comments?

(Not friends of mine)

Terracotta, the material, makes me nauseous. Looking at it, or just hearing the word, makes me cringe. Touching it is out of the question. One may say that my reaction to Terracotta is quite irrational: I have no discernible reason for it. But rationality seems to have little to do with it – it’s not the sort of thing for which one has reasons. My aversion is something to be explained, not justified. It is not the kind of thing that a revealed lack of justification would have an effect on, and is thus different from most beliefs and at least some judgments.

The lack of reason for my Terracotta-aversion means that I don’t (and shouldn’t) try to persuade others to have the same sort of reactions. Or, insofar as I do, it is pure prudential egotism, in order to make sure that I won’t encounter terracotta (aaargh, that word again!) when I go visit.

So here is this thing that reliably causes a negative reaction in me. For me, terracotta belongs to a significant, abhorrent, class. It partly overlaps with other significant classes like the cringe-worthy – the class of things for which there are reasons to react in a cringing way. The unproblematic subclass of this class refers to instrumental reasons: we should react aversely to things that are dangerous, poisonous, etc. for the sake of our wellbeing. But there might be a class of things that are just bad, full stop. They are intrinsically cringe-worthy, we might say. They merit the reaction. (It is still not intrinsically good that such cringings occur, though, even when they’re apt – the reaction is instrumental, even when its object is not).

Indeed, these things might be what the reactions are there for, in order to detect the intrinsically bad. Perhaps cringing, basically, represents badness. If we take a common version of the representational theory of perception as our model, the fact that there is a reliable mechanism between type of object and experience means that the experience represents that type of object.

Terracotta seems to be precisely the kind of thing that should not be included in such a class. But what is the difference between this case and other evaluative ”opinions” (I wouldn’t say that mine for Terracotta is an opinion although I sometimes have felt the need to convert it into one), those that track proper values? Mine towards terracotta is systematic and resistant enough to be more than a whim, or even a prejudice, but it doesn’t suffice to make terracotta intrinsically bad. Is it that it is just mine? It would seem that if everyone had it, this would be a reason to abolish the material but it wouldn’t be the material’s ”fault”, as it were. Is terracotta intrinsically bad for me?

How many of our emotional reactions should be discarded (though respected. Seriously, don’t give me terracotta) on the basis of their irrelevant origins? If the reaction isn’t based on reason, does that mean that reason cannot be used to discard it? This might be what distinguishes value-basing/constituting emotional reactions from ”mere” unpleasant emotional reactions. Proper values would simply be this – the domain of emotional reactions that can be reasoned with.

Looking for your inner child?

2 April 2010 | In Moral Psychology, Neuroscience | Comments?

In a paper published in PNAS a few days ago (Disruption of the right temporoparietal junction with transcranial magnetic stimulation reduces the role of beliefs in moral judgments), a fairly interesting experiment was reported by authors Young, Camprodon, Hauser, Pascual-Leone and Saxe: By using transcranial magnetic stimulation (TMS) to disrupt neural activity in the right temporoparietal junction (RTPJ), they managed to influence participants’ moral judgments in a revealing manner. Normally, we judge the moral status of an action to be dependent on the mental state (belief/intention) of the agent. But disrupting this particular area (on independent grounds believed to be responsible for judging mental states of others) changed this, and the moral judgment of the action tended to become more a matter of judging the effect of the action. In this case: trying to poison someone, but failing, was not condemned as impermissible, since the would-be victim was, in the end, alright. The moral judgment was given on a questionnaire and on a scale from 1 (forbidden) to 9 (permissible).

Belief attribution is an integral part of normal, grown-up, moral judgments. To disregard the intention and focus solely on the result in every kind of moral judgment is, however, often the moral strategy of children up to the age of six.

What’s exciting about this? Initially? Not much that wasn’t fairly common sense to begin with: if you disrupt an area of the brain responsible for judging intentions, then you disregard intention when forming your moral judgment. What’s noteworthy is that people keep making what they take to be moral judgments, even when this arguably central feature of moral judgments goes missing.

Note that the scale used is whether the act is forbidden or permissible, not whether it is good or bad. There are difficulties here, and the authors do not justify their choice of words. Presumably, it should be forbidden to intend, and try, to poison someone because trying sometimes leads to success, people get poisoned and that’s bad. But that’s not exactly the question posed in this experiment – it seems to be whether it’s permissible to intend to poison someone and fail. ”No harm”, as someone so fittingly put it, ”no foul”. That’s hardly being immoral, it’s rather being attuned to the artificial nature of the scenario.

Unfortunately, the paper does not say whether the participants were also asked to justify their judgment. This is clearly a relevant thing to study here: both while still under the influence of the TMS, and afterwards. Would they find a way to morally justify their judgment, however far-fetched (as would perhaps be suspected, given the kind of post-hoc justification explored by Jonathan Haidt), or would they state that they must have been temporarily insane?

While not exactly a groundbreaking piece of work, and there are questions left open, it is certainly the kind of gentle probing of the brain we should be doing to find out how it works its way around morals.

What did you Quine today?

25 January 2010 | In Meta-ethics, Meta-philosophy, Moral Psychology, Naturalism | Comments?

”Does it contain any experimental reasoning, concerning matter of fact and existence?” – David Hume

In last week’s installment of the notorious radio show that I’ve haunted recently, I spoke to the lovely lady on my left in the picture below about the use of empirical methods in moral philosophy. The ”use of empirical methods” of which I speak so fondly is, on my part, restricted to reading what other people have written, complaining about the experiments that haven’t been done yet, and then speculating on the result I believe those experiments (not yet designed) would yield.

Anyway: I have a general interest in experimental philosophy, but I haven’t signed anything yet, you know what I mean? That is: I don’t think (what the host of the radio show wanted me to say) that ”pure” armchair philosophy is uninteresting. Indeed, I believe that any self-respecting empirical scientist ought to spend at least some time in the metaphorical armchair, or nothing good, not even data, can come out of the process.

When coming across a philosophically interesting subject matter (and, let’s face it, they’re all philosophically interesting, if you just stare at them long enough. Much of our discipline is like saying the word ”spoon” over and over again until it seems to lose its meaning, only to regain it through strenuous conceptual work) I often find it relevant to ask ”what happens in the brain”? What are we doing with the concept? It is obviously not all that matters, but it seems to matter a little. Especially when we disagree about how to analyze a concept, there might be something we agree on. Notoriously, with regard to morality, we can disagree as much as we like about the analysis of moral concepts, but agree on what to do, and on what to expect from someone who employs a moral concept, no matter what her meta-ethical stance. Then, surely, we agree on something and armchair reasoning just isn’t the method to coax it out.

I try to be careful to emphasize that empirical science is relevant to value-theory, according to my view, given a certain meta-ethical outlook. Given a particular way to treat concepts. If we treat value as a scientific problem, what can be explained? Since there is no consensus on value, we might as well try this method. Whether we should or not is not something we can assess in advance, before we have seen what explanatory powers the theory comes up with.

Treating ”value” as something to find out about, employing all knowledge we can gather about the processes surrounding evaluation etc. is, in effect, to ”Quine” it. It seems people don’t Quine things anymore, or rather: that people don’t acknowledge that this is what they’re doing. To Quine something is not the same as to operationalize it, i.e. to stipulate a function for the concept under investigation, and to say that from now on, I’m studying this. To Quine it is to take into consideration what functions are being performed, which have some claim to be relevant to the role played by the concept, and to ask what would be lost, or gained, if we were to accept one of these functions as capturing the ”essence” of it. It is to ask a lot of roundabout questions about how the concept is used, what processes influence that use and so on, and to use this as data to be accounted for by an acceptable theory of it.

A Lamp, David Brax (yours truly) and Birgitta Forsman (I cannot speak for her, but I’m sure she likes you too). The lamp did not volunteer any opinions on the subject matter, but has offered to participate in a show on a certain development in 1800-century philosophy. Photo: Thomas Lunderqvist

When does it get interesting?

17 December 2009 | In Moral Psychology, parenting, Self-indulgence | 2 Comments

We just returned home with our newborn child, who shall remain nameless (but not for long). And, in a very Carrie-like trope indeed, it got me thinking (Carrie of New York single life fame, not the gym-hall massacre one, or the one hilariously falling over in the opening credits of ”Little House on the Prairie”): At what age does a human being get interesting? Every now and then I come across opinions, and decided preferences (and you know those interest me no end), in this matter. Most frequently the (faux)controversial opinion is that kids are tedious and uninteresting at least up until the point they develop thoroughly thought-through political views and a natural distaste for their parents. Also, they should be able to help you with your computer.

Others require less. Capacity for speech, for instance, and some creative thinking/acting to boot. This preference is guided by wanting a human being to be treatable as an equal, and the relevant equality is one of thought, rather than of bowel movement or need for constant attention. Four-year-olds will do for these people. There is a trend in moral psychology to make a big deal out of the stage where kids start to distinguish conventional from non-conventional wrongs, which is usually a bit earlier still, and that certainly is an interesting age.

Infants, on the other hand, are often perceived as poop/sleep/crying machines that have the mild added value of smelling quite nice. (Young, single intellectuals – the group I’ve spent the larger portion of my life belonging to, and thus have some inside knowledge of – have a hard time realising how they could possibly enjoy the company of, and thus care for, people of this minuscule sort.) They may be cute, and they may evoke some positive emotions, but interesting? Not as such.

I beg to differ (from the people I just kind of made up). Infants exhibit complex behaviors, and while these might seem random beyond the bare essentials, learning does take place. The study of infants needs to take into account what they actually control. One particularly interesting study used the baby’s ability to change the speed with which it sucked on a pacifier to reveal its preferences. We don’t need to assume full-blown intentions in order to identify interesting behaviors and the beginning of individual differences. To me, at least, the Little One has been interesting from the start.

Now, about this response-dependency thing

3 December 2009 | In Emotion theory, Meta-ethics, Moral Psychology, Naturalism | Comments?

I am a fan of keeping options open, and of not leaving open options undeveloped. When we find ourselves with conflicting intuitions in situations where intuition is our only ground for theoretical decisions, it is basically an act of charity to develop a theoretical option anyway, in case someone will find it in their heart to – as we so endearingly say in philosophy – entertain the proposition. (My attitude here, you might have noticed, is a bit counter to my lament about a certain trope in the post below.)

Store that in some cognitive pocket (memory, David, it’s called ’memory’) for the duration of this post.

Response-dependency. Some concepts, and some properties, are response-dependent. That means that the analysis of the concept, and the nature of the property, is at least partly made up by some response. To be scary, for instance, is to have a tendency to cause a fear-response. There is nothing else that scary things have in common. Things are different with the concept of danger: Dangerous things are usually scary too, but that is not their essence. Their essence consists in the threat they pose to something we care about, or should care about. Fear is usually a reasonable response to danger; fear is usually how we detect it. Danger might still be response-dependent, but then fear is not the crucial response.

Response-dependency accounts have been developed for many things. Quite sensibly for notions such as being disgusting. Famously by Hume for aesthetic value. And arguably first and foremost under the name ”secondary qualities” by Locke, and unceasingly since by other philosophers, for colours. Morality, too, has been judged response-dependent, and a great many things have been written about whether this amounts to relativism or not, and whether that would be a point against it.

Response-dependency accounts of value and of moral properties have a lot going for them. Famously, beliefs about moral properties are supposed to involve some essential engagement of our motivational capacities. And if the relevant property is one our knowledge of which is dependent on some motivational response, say an emotion, then we’re all set. Further, if these concepts/properties are response-dependent, it would account for many instances of moral disagreement – we disagree on moral issues when our moral responses differ, and when the difference is not accountable by a difference in other factual beliefs and perceptions. If we accept that responses are all there is to moral issues, we might have to learn to live with the existence of some fundamental disagreements between conflicting responses, and moral views. Relativism follows if there is nothing that moral responses (for the most part, the moral response involved is some kind of emotion) track. What the account gives us is a common source of evaluative meaning, located in the fact that we all share the same basic type of responses. We just disagree about what causes those responses, and about what objects merit the response.

If we insist on locating the value (moral or otherwise) in the object/cause of the response (note that the object and the cause might be different things – we might project an emotion onto something that did not cause it. This happens all the time), the response-dependency account results in a form of moral relativism. If one finds relativism objectionable, and there is no way to provide firm moral properties in the cause/object structure of typical moral responses, one might therefore want to reject response-dependency wholesale. I think this is a mistake. If we agree that there is such a thing as a moral/evaluative response, and this response is something that all conceptually competent evaluators have in common, we have our common ground right there, in the response. It is not the kind of relativism where we find that seemingly disagreeing parties are actually speaking about different things altogether. In fact, there is a common core evaluative meaning, and that meaning is provided by the relevant response.

So, to the suggestion then, our theoretical option left open for development: that moral/whatever value is in the response, not in the object of the response. This seems to be the obvious solution once we’ve established that the value is metaphysically dependent on the response, and there is no commonality to what causes the response. If the responses themselves are something that can seem valuable, and emotions usually do, we should develop that option, and disregard the fact that we tend to project value onto the object of mental states. (If we keep on, as I do, and argue that the evaluative component of any emotion consists in its valence, and valence is cashed out in terms of pleasantness – unpleasantness, we have a kind of hedonism on our hands, but this option is open for any response you like).

Nothing is metaphysically more response-dependent than the responses themselves, and yet, this move avoids any objectionable form of relativism, while explaining the appearance of relativism. And, given that the response is motivationally potent, we have an inside track to the motivational power of moral/evaluative properties/beliefs. This, I’d say, makes it a theoretical option worth pursuing.

Lecturing in the shower

30 October 2009 | In Meta-philosophy, Moral Psychology, Self-indulgence | Comments?

I don’t sing in the shower. It’s not that I don’t sing, period; I will join in when people let me and have even been known to attempt the occasional added fifth or fourth or thereabouts. It’s just not a shower thing. I do, however, prepare lectures in that setting. Trying out how they sound and so on. Thinking is usually a rather unstructured, associative affair, and even writing these post-typewriter days has lost some of its definitiveness (as these rather free-floating associations of mine amply demonstrate), but Thinking Out Loud helps in getting the clarity and simplicity required for getting the ideas across. Just ask the neighbors in the poorly soundproofed apartment next to ours.

Duelling Processes

6 October 2009 | In Meta-ethics, Moral Psychology | Comments?

On Thursday, I’m supposed to summarize and criticize a draft for a paper called ”the Methods of Ethics” (yet contains no reference to Sidgwick) with the subtle subtitle ”conflicts built to last”.

The paper (interesting and very nicely written) reflects on the philosophical importance of the ”dual process” model for moral judgments and points out that having two processes influencing our moral judgments is useful since each process can correct the mistake that the other leads to on its own. In vague and possibly misleading terms, the ”emotional” process is in place to make sure that the ”cognitive” process does not run away with us (getting us stuck in prisoner’s dilemmas and repugnant conclusions. Also, one presumes, to keep us from making biased calculations), while the cognitive process is there to make sure we don’t get stuck in emotionally reinforced prejudices.

One important role for the emotional process is to tilt the actual and perceived utilities of an option so that, for instance, a seemingly rational breach of promise becomes less tempting, due to expected guilt. (It’s a choice-architectural device, to speak with the nudge people.) The two processes are commonly held to be representative of two different moral theories. The cognitive process is broadly ”utilitarian”, and the emotional is broadly ”deontological”, spurring some philosophers to say that the dual process model is a perfect diagnosis of the history of moral philosophy. The relation between the two processes, then, is essentially a conflictual one.

It’s an interesting speculation, but there are complications.

First: there might be several decision procedures at play; the ”dualism” is merely a handy simplification with a long history, reaching us from Plato via Hume, and Jane Austen, for crying out loud.

Second: there is no reason to think that the cognitive process as such is utilitarian – there are other reasoned ways to reach a moral decision. (The empirical work on these issues is just getting started.) Nor is there any reason to believe that our emotions are essentially deontological, especially seeing how our emotional systems are among the most complicated, interconnected and plastic in the brain. One can easily imagine an agent whose emotions are always targeting the utilitarian option, who is an intellectually convinced deontologist, for instance.

Third: even if our deliberation can occasionally go against our emotional reactions, what that deliberation is about, what consequences to take into account, has to be established somehow, and emotions certainly play a central role in that process.

I believe it is a mistake to see these processes as being essentially in conflict, rather than in cooperation. We are probably best off when we get the same verdict from both processes (which, admittedly, might require some negotiation) and we usually get into trouble when we don’t.

The paper proposes that the take-home lesson from these findings is that we should not be that concerned with reaching the moral truth, but with reasoning correctly, i.e. using both processes. But if we have no moral truth to strive towards, or to use as a corrective tool, how do we know when we have reasoned correctly? Especially seeing how the outcome of each process depends on a number of contingent circumstances. There are infinite possibilities when it comes to what sort of a balance is struck between the two processes, so are they all equally valid?

It’s noteworthy that when considering the Prisoner’s Dilemma case, the way the author determines that emotional processes help us reach the right decision is by showing that they lead to the best overall consequences. It is good that we have two processes, but that goodness must somehow be assessed, and that calls for one criterion of rightness, not several.