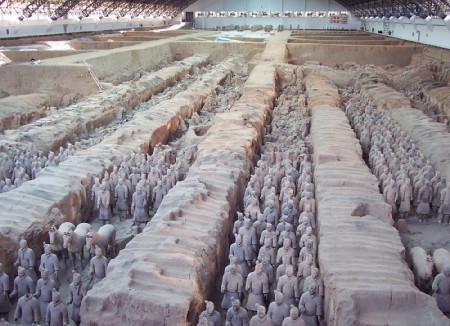

Reasons and Terracotta

3 May 2010 | In Emotion theory Meta-ethics Moral Psychology Psychology Self-indulgence | Comments?

(Not friends of mine)

Terracotta, the material, makes me nauseous. Looking at it, or just hearing the word, makes me cringe. Touching it is out of the question. One may say that my reaction to terracotta is quite irrational: I have no discernible reason for it. But rationality seems to have little to do with it – it’s not the sort of thing for which one has reasons. My aversion is something to be explained, not justified. It is not the kind of thing that a revealed lack of justification would have an effect on, and is thus different from most beliefs and at least some judgments.

The lack of reason for my terracotta-aversion means that I don’t (and shouldn’t) try to persuade others to have the same sort of reactions. Or, insofar as I do, it is pure prudential egotism, in order to make sure that I won’t encounter terracotta (aaargh, that word again!) when I go visit.

So here is this thing that reliably causes a negative reaction in me. For me, terracotta belongs to a significant, abhorrent, class. It partly overlaps with other significant classes like the cringe-worthy – the class of things for which there are reasons to react in a cringing way. The unproblematic subclass of this class refers to instrumental reasons: we should react aversely to things that are dangerous, poisonous, etc. for the sake of our wellbeing. But there might be a class of things that are just bad, full stop. They are intrinsically cringe-worthy, we might say. They merit the reaction. (It is still not intrinsically good that such cringings occur, though, even when they’re apt – the reaction is instrumental, even when its object is not.)

Indeed, these things might be what the reactions are there for, in order to detect the intrinsically bad. Perhaps cringing, basically, represents badness. If we take a common version of the representational theory of perception as our model, the fact that there is a reliable mechanism between type of object and experience means that the experience represents that type of object.

Terracotta seems to be precisely the kind of thing that should not be included in such a class. But what is the difference between this case and other evaluative ”opinions” (I wouldn’t say that mine for terracotta is an opinion, although I have sometimes felt the need to convert it into one), those that track proper values? Mine towards terracotta is systematic and resistant enough to be more than a whim, or even a prejudice, but it doesn’t suffice to make terracotta intrinsically bad. Is it that it is just mine? It would seem that if everyone had it, this would be a reason to abolish the material, but it wouldn’t be the material’s ”fault”, as it were. Is terracotta intrinsically bad for me?

How many of our emotional reactions should be discarded (though respected. Seriously, don’t give me terracotta) on the basis of their irrelevant origins? If the reaction isn’t based on reason, does that mean that reason cannot be used to discard it? This might be what distinguishes value-basing/constituting emotional reactions from ”mere” unpleasant emotional reactions. Proper values would simply be this – the domain of emotional reactions that can be reasoned with.

The baby critic

15 April 2010 | In Books Comedy media parenting Psychology Self-indulgence TV | 3 Comments

Through the looking glass, okay?

A few months back, to the great amusement of late night talk shows (US) and topical comedy quiz participants (UK), a group of scientists lodged a complaint against a trend in current cinematic science fiction: It’s not realistic enough. The sciency part of it is not good enough. Science fiction stories should help themselves to only one major transgression against the laws of physics, argued Sidney Perkowitz. To exceed this limit is just lazy story-telling – time travel being a bit like the current French monarch in most Molière plays. The best works of science fiction follow that almost experimental formula: change only one parameter and see how the story unravels.

The criticism that started already in the first season of ”Lost” and has become louder ever since was precisely this: the writers clearly have no idea what they’re on about, they haven’t even decided which rules of physics they have altered. The viewer is constantly denied the pleasure of running ahead with the consequences of the changed premise and then watching how the story runs its logical course. Of course, a writer may add surprises, there is pleasure in that too, but you cannot constantly change the rules without adding a rationale for that change. That’s just cheating (or it’s playing a different game altogether. That is acceptable, of course, I’m not saying it isn’t, I just think this accounts for a lot of the frustration people experience with shows like ”Lost” or ”Heroes”).

The comedians who ridicule the scientists claim that the latter miss the point: Science fiction is supposed to be fiction. But in fact the point is that even fiction, at least good fiction, is not arbitrary.

It struck me that the point made by this group of scientists is very much the reaction that kids have when you break the rules in their pretend play. (There’s an excellent account of this in the opening chapters of Alison Gopnik’s book ”The Philosophical Baby”).

One of the interesting things about kids is their ability to, and interest in, pretend play. They are from a very early age able to follow, or to make up, counterfactual stories and imaginary friends and foes, and the stories that play out have a sort of logic. If you spill pretend tea, you leave a mess that needs to be pretend-mopped up. Many psychologists now argue that this is more or less the point of pretend play: you work out what would happen if something, that does in fact not happen, were to happen. The more outlandish the countered fact, the more work you need to put in to draw the right, or sensible, conclusions, and the more adept you become at reasoning, planning and coming up with great ideas. Stories that don’t further that project might be nice nevertheless: literature has other functions, after all. But the decline in this particular quality in current science fiction is still a sound basis for criticism. Even a baby can see that.

A unique set of influences

14 April 2010 | In Books parenting Psychology Self-indulgence | Comments?

In one of the early notebooks in which I used to put the kind of thoughts, rants and musings that nowadays make it into this blogish existence I made some sort of remark about how to overcome the anxiety of influence; the suspicion that all one’s work is somehow derivative. ”One can at least aspire” I wrote (or something like that, I obviously didn’t bother to actually find the thing. It’s a notebook, for crying out loud. It doesn’t even have a ”search” function) ”One can at least aspire to be the result of a unique set of influences”. In other words: it doesn’t much matter whether one is little less than the effect of what one has read, seen, heard etc. since the longevity of life in the plastic state makes sure that some originality will ensue even from that process. In addition: to track down the complete set of sources that ”made” a particular author/thinker is excellent fun. One can even toy with that sort of thing in one’s writings, provide hints and such (misleading ones, if one wants to be clever).

Anyway, I’m going somewhere with this. Oh, yes: I find that most things I write are, in hindsight, quite clearly the result of what I was interested in at the time, even when those things were not obviously related to begin with. Thus, for instance, it is highly unlikely that my dissertation would have gone down the way it did, were it not for the fact that I happened to be into cognitive science just before I got the job (much to the dismay of my supervisors). The sort of value theory I was into before that was much more of a dry, conceptual analysis kind of thing.

So I’m pretty sure that something interesting will come from my current preoccupation with the two subjects of psychopathy and child (infant, actually) psychology. It’s not hard to find a link, obviously: developmental processes are key in both areas, but I’m very likely to make a big point out of this, merely for the reason that these are the things that interest me now.

For instance: one current trend in child psychology is to stress the wide, undiscriminating attention of infants and toddlers (more of a lamp than a spotlight), which makes them better than adults at noticing task-irrelevant features. Psychopaths, according to another book I’m reading, are quite the opposite: one of the cognitive peculiarities of psychopaths is their ability to focus, and their inability to remember task-irrelevant features. As pointed out in the previous post, attention may suffer when the amount of information increases, but the reverse is true as well. The inability to shift attention when previously irrelevant information becomes relevant, or shows you that a shift is needed, is clearly a problem in a variable environment, such as our social one. Infants are in the process of finding out what is relevant, and thus need not focus attention just yet.

My third current interest is in the cognitive science of literature. I’m likely to find a way to make that relevant to the project as well.

(Currently reading)

Art as Play

5 April 2010 | In Books Self-indulgence | Comments?

”I suggest that we can view art as a kind of cognitive play, the set of activities designed to engage human attention through their appeal to our preference for inferentially rich and therefore patterned information”

Brian Boyd: On the Origin of Stories – Evolution, cognition, and fiction

I’ve written precisely one text about aesthetics, and used as a kind of motto this quote from Graham Greene’s (wonderful) novel Travels with My Aunt: ”Sometimes I have an awful feeling that I am the only one left anywhere who finds any fun in life”. I’m reading Brian Boyd’s ”On the Origin of Stories” and the feeling is slowly subsiding.

Suddenly Susan

30 March 2010 | In Books Meta-philosophy Psychology Self-indulgence | 2 Comments

First of all: I like Susan Blackmore. In fact, I met her once, at the first proper conference I ever attended (the ”Toward a Science of Consciousness” conference in Tucson 2004 hosted by David ”madman at the helm” Chalmers). She came and sat next to me during the introductory speech and asked me what had just been said. I said I hadn’t paid that much attention, to be honest, but I seemed to remember a name being uttered. We then proceeded to reconstruct the message and ended up having a short, exciting discussion about sensory memory traces. From now on, I remember thinking (having to dig deeper than just in the sensory memory traces, which will all have evaporated by now), this is what life will be like. It hasn’t, quite.

ANYWAY: So I like Susan Blackmore, but today, I’m using her to set an example.

I recently had occasion to read her very short introduction to consciousness in which she takes us through the main issues and peccadilloes in and of consciousness research. One of the sections deals with change blindness and she describes one of the funniest experiments ever devised: The experimenter approaches a pedestrian (this is at Cornell, for all of you looking to make a cheap point at a talk) and asks for directions. Then two assistants, dressing the part, walk between the experimenter and the pedestrian carrying a door. The experimenter grabs the back end of the door and wanders off, leaving the pedestrian facing one of the assistants instead. And here’s the thing: only 50% of the subjects notice the switch. The other 50% keep on giving directions to the freshly arrived person, as if nothing has happened.

This is a wonderful illustration of change blindness, and it’s a great conversation piece. You can go ahead and use it to illustrate almost any point you like, but here comes the problem: there is a tendency to overstate the case, especially among philosophers (I’m very much prone to this sort of misuse myself), due to the fact that we usually don’t know, or don’t care much, about statistics. Blackmore ends the section in the following manner:

When people are asked whether they think they would detect such a change they are convinced that they would – but they are wrong.

We have a surprising effect: people don’t notice a change that should be apparent, and as a result you can catch people having faulty assumptions about their own abilities, and no greater fun is to be had anywhere in life. But Blackmore makes a mistake here: People would not be wrong. Only 50% of them would. It’s not even a case of ”odds are, they are wrong”.

I would use this as an example of some other cognitive bias – something to do with our tendency to remember only the exciting bit of a story and then run with it, perhaps – only I’m afraid of committing the same mistake myself.

(Btw: I also considered naming this post ”so Sue me”)

The Heuristic in the Bias

21 February 2010 | In Self-indulgence | Comments?

In the art of annoying people with science, nothing is as effective as pointing out cognitive biases. Bringing out the Confirmation Bias in particular is unlikely to endear you to friends and colleagues. But you usually get away with the point – there is almost always more research to be done – unless someone figures out that you already came equipped with the idea that your opponent would use the confirmation bias, and then chose the evidence that seemed to confirm that idea. The discussion that follows can take up a substantial part of the seminar, and effectively hide the fact that you haven’t done the required reading. Do try this at home.

To be caught exhibiting any kind of bias is usually held to be a bad thing, not only in science. But, as Kahneman, Tversky and Slovic (among others) point out: biases are heuristics. They are usually very useful indeed. It is in the nature of a bias/heuristic that it may lead us astray, but practically any epistemic strategy or habit is bound to lead astray in some cases. We usually solve this by having other strategies to keep the first in check. And so on. Peer-review is one such strategy, not fool-proof. Democracy might spring to mind, too.

The term ”confirmation bias” was coined, I believe, by the psychologist Peter Wason, but the notion is way older than that. My favorite wording comes from Laurence Sterne’s eternally ahead of its, and any, time novel ”Tristram Shandy”:

It is the nature of a hypothesis, when once a man has conceived it, that it assimilates everything to itself, as proper nourishment; and, from the first moment of your begetting it, it generally grows the stronger by everything you see, hear, read, or understand. This is of great use.

”This is of great use”. I used this paragraph as one of many mottoes for the second part of my dissertation. I certainly did pick the evidence that seemed to confirm my theory (that pleasure and value are very closely related indeed). But the point is that I did not conceive of a theory, derive the consequences and then do the research. The project was much more preliminary than that (still is). I wanted to find out what, if anything, hedonism was true about. What are the facts, and can they be put together in a coherent, sense-making way to form a theory, still recognisable as ”hedonism”? At this stage, at least, confirmation bias is a very useful strategy.

If you like it maybe you should write a dissertation about it?

17 February 2010 | In Self-indulgence | 2 Comments

I’m mostly useless at cocktail-parties. To engage, exchange pleasantries, gossip and general observations and then move on goes against every instinct I have. As a sort of debutante, I thought that my role at social occasions should be that of the salon-communist – to provoke, insult and amuse the other guests with my wild and controversial ideas, ultimately with the function to reassure them that the company they kept was a socially and intellectually diverse one, and that they were very tolerant indeed. I should be endured. I was not.

Unwilling to change to pleasant observations about trivial matters, my next, and current, strategy was to identify special interests. Their ”Geekdoms”, in a word. People, I decided, usually have some obscure special interest that they long to talk about at length, but believe will bore others. I believed that people’s most interesting ideas and complex reasoning moved around these special interests. I also believed that when no one wanted to talk about what I wanted to talk about, my best bet was to identify what interested them in the same way that my interests interested me. I was starting work on my dissertation, and wanted to identify what others would write their dissertation about, if they were given the opportunity. The idea was that everyone has at least one dissertation, as well as a novel, in them.

Always in need of over-justification, I had the further idea that bringing out these special interests and showing that they could be used to one’s advantage in a social context would help keep these interests alive. Insofar as people grow boring, it is because they haven’t been encouraged to stay interesting. The social ineptitude associated with the nerd has always struck me as a terrible mistake, based on the inability to cope with knowledge. Knowledge management should be as much of a thing as anger management is.

How do I get happy?

4 February 2010 | In Emotion theory Happiness research Psychology Self-indulgence | 1 Comment

If you want to sell a book about happiness research/positive psychology, or anything even remotely related to that area, you better be prepared to answer this question. Or at least claim that you are, and then subtly change the subject and hope that no-one notices.

Basically, the answer is this: find out what happiness is, and then, you know, go get that.

Sometimes when you want something, the best strategy is to find out how people who got it behave, and copy that behavior. This works reasonably well for things like getting in shape, making a bargain, learning how to ride a bike, etc. It does not work as well if what you want is to be tall. It doesn’t help to copy the behavior of tall people, or to wear their clothes or go on the rides only they may ride at the amusement park. If you want to be as good a writer as Oscar Wilde was, to copy every word Oscar Wilde wrote won’t exactly do it (That is not to say that it wouldn’t do anything, I’m sure there are worse ways of learning the style).

Just copying behavior statistically demonstrated to be exhibited by happy people is probably not the best idea (that would be Cargo Cult Science): If we are to learn from the habits of others, we better learn how to generalize correctly, and in order to do that, we need to understand how happiness works. In order to be happy, it’s probably not sufficient to get the things/habits/relations that happy people got. An educated guess is that the relevant factor is that they got things/habits/relations that they like, and so should you. Or you should like the things you’ve already got. Whichever is most convenient.

Parachutists may be the happiest people alive, but the excitement of jumping might upset, bore or kill you. You should do the things that do for you what parachute-jumping does for them. And note that even ”excitement” might be a wrongly generalized category: maybe excitement is not for you. Maybe you’re a sofa kind of person. It might still be possible for you to be a person for whom excitement or even parachute-jumping is rewarding, but that requires a completely different kind of neural rewiring.

Possibly, what happy people got is the disposition to like what they get, or to find something to like in everything they get and that disposition, rather than the things that they, or you, like, is what you should get. Of course, if you are disposed to like everything, you might stop and appreciate the glorious spectacle of a runaway train moving towards you at speed, and that, you know, would be bad. You shouldn’t have that disposition. Further qualifications are needed, for strategic and individual reasons. This is why we should be very careful when we try to translate science into advice.

As to the neural rewiring: reading about happiness-research might bring about some of the required changes. Learning does occasionally occur as a consequence of reading, after all. But it is likely to do as much good for your happiness as a class on Newtonian mechanics would do for your billiard-playing skills.

What Modesty Forbids

31 January 2010 | In Books Hedonism Self-indulgence | Comments?

I’m sure every reader has his/her way of working her/his way through a book or paper with the help of a pen, underlining and making notes in the margin. The ”notes in the margin”, for me, have settled on a quite restricted number of expressions. There’s ”qb”, of course, for ”question begging”, there is the exclamation mark (which I hardly ever use otherwise) for remarkable statements, there are shorthands for missing premisses, spurious reasoning, and so on. There is the occasional ”good point”, when something strikes me as being just that. And the ”Exactly”, when someone makes a good point with which I agree. Finally, the ”Exactly. Damn it”, when the point is good, I agree, and it is so essential to my own argument that I curse the fact that someone else got to publish it first. (These things tend to happen when your views are true and interesting.)

This happened to me constantly while reading Leonard D Katz’ absolutely superb dissertation ”Hedonism as metaphysics of mind and value” (and yes, my title is a bit of a homage). In fact, I might as well have put a sticker with ”Exactly. Damn it” on the cover. (His practically book-length entry on pleasure in the Stanford Encyclopedia of Philosophy is simply amazing, without doubt the best piece of philosophical writing there is on the subject, and the fact that things still get written about pleasure without reference to either of those two texts is nothing short of a scandal. (Stop it David, you are getting all worked up and excited, and that’s a shame).)

And now it happened again, in the work of Sharon A Hewitt. The most striking resemblance between my view and hers is our claim that goodness and badness are basically phenomenal properties: the experiences of pleasure and displeasure. ”Feeling good” is, in fact, precisely that: having that feeling which goodness consists in. I would like to praise her work, because it is really quite brilliant, but it seems to me that modesty forbids it. We have not been in contact while working on our respective dissertations, so either there is a common source (and Katz’ work might very well be it. That, or C.I. Lewis’ ”An analysis of knowledge and valuation”), or we have some sort of snail-telegraph thing going.

Stop reading, start writing

9 January 2010 | In BBC Books Meta-philosophy Self-indulgence | Comments?

My first all too serious philosophical essay was on Heidegger (well, actually, I did a number on the ”positionality” concept in the work of Sartre earlier still, but it would take an insane amount of scholarly obsession for anyone to ever dig that up). The nicest thing said about it was that it is ”not as incomprehensible as these things usually are”. The literature I discussed, I found at the University Library, actually going through a number of philosophy journals. I had a computer at the time, which was just barely hooked up to the internet, but didn’t use it for literature searches, just for writing and the occasional email. I spent a lot of time thinking about the subject of my essay, and used a very limited amount of sources.

A year or so later, while working on a different essay, I discovered JSTOR, and for about a month and a half, the printer didn’t get a rest. It suddenly dawned on me that everything interesting had been written about, at length, from almost every perspective, and the goal to find a theoretical position that was not currently occupied, and then to occupy it, suddenly struck me as much more difficult than I’d imagined. I spent the next few years reading more, too much probably, and thinking and writing less.

I used to do all my best thinking during walks and while running (or derogatorily: ”jogging”). Usually in very dull environments, not to distract from the thinking. Then I got an iPod, and started to listen to lectures, podcasts and audiobooks during those walks and runs. (iTunes university has some great stuff, the podcasts from Nature, and from TED and the RSA are excellent. BBC Radio 4’s ”Thinking Allowed” and ”In Our Time” just have me in stitches). And instead of thinking about what I’ve just heard, I tended to listen to another lecture, podcast or audiobook. Similarly with papers, even books. Before I start working on this chapter, I argued, I just need to read this paper, or that book. One wouldn’t like to be caught out ignorant, now, would one? No, one would not.

The all too great availability of other people’s writing and thinking made me quite heavy on the consumer side of science and philosophy, and much less of a producer. It is, of course, a great thing to learn, and to listen, but in order to become a philosopher, it is necessary to start doing it for yourself. To actually not care, for a bit, whether someone has written that same thing before, and been better read while doing so.

My dissertation took longer than it should have, and I know people who have been, and still are, in that state where they just can’t seem to finish their texts. Partly, I believe, for this reason. They are excellent, well-read consumers and thoughtful, accomplished critics, but seem almost to have forgotten how to actually do philosophy. (The dominance of ”critical” philosophy among published articles is a testament to the fact that this tendency is very common indeed). The kind of second-order thinking where you are constantly reflecting on how what you are writing relates to what other people have written tends to stand in the way of confident, genuinely original and interesting work. At some point, you just have to get out of reading mode, and enter writing mode.